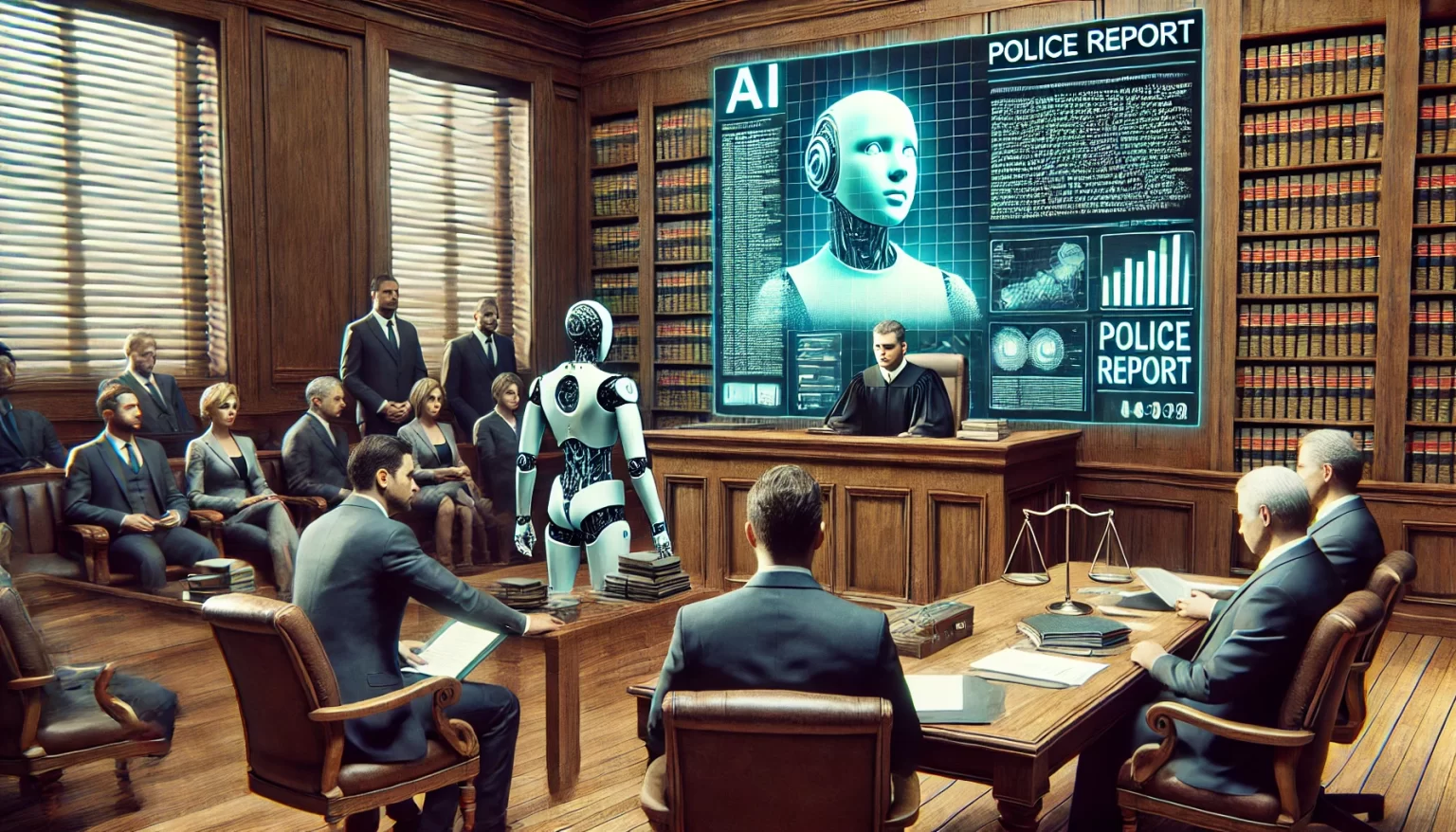

In the ever-evolving landscape of law enforcement, technology continues to play a crucial role in shaping how police officers carry out their duties. Recently, the introduction of AI chatbots for generating police reports has sparked significant interest, and concern, within the forensic science community.

AI-Generated Police Reports: A New Era in Law Enforcement

Oklahoma City Police Sgt. Matt Gilmore recently experienced firsthand the transformative potential of AI in law enforcement. During a routine search operation, his body camera captured audio and radio chatter, which was then processed by an AI chatbot to generate a detailed incident report. The AI-generated report was completed in just eight seconds—a task that would typically take Sgt. Gilmore up to 45 minutes. Remarkably, the report was not only faster but also more accurate, even documenting details the officer himself had overlooked.

This AI tool, developed by Axon, the company behind the widely used Taser and police body cameras, represents a significant shift in how police work is documented. Named “Draft One,” the tool is built on the same generative AI technology that powers ChatGPT. However, this advancement raises critical questions about the reliability of AI-generated reports in legal proceedings.

Legal Concerns and the Integrity of AI-Generated Reports

While the speed and efficiency of AI-generated reports have impressed many in law enforcement, legal scholars, prosecutors, and police watchdogs are more cautious. The primary concern is whether these reports will hold up in court, especially when an officer may need to testify about what they witnessed.

District attorneys have expressed the need for clear guidelines to ensure that AI does not replace the critical human element in police reporting. They worry that an officer’s reliance on AI could lead to situations where the officer might say, “The AI wrote that, I didn’t,” which could undermine the credibility of the report and the officer’s testimony.

Moreover, the technology’s ability to accurately capture and convey details without introducing errors, known as “hallucinations” in AI parlance, is still an open question. While AI can process vast amounts of data quickly, it is prone to generating plausible-sounding but false information. This raises the stakes for ensuring that AI-generated reports are thoroughly vetted by human officers before being submitted as part of a legal process.

AI in Policing: A Double-Edged Sword?

AI technology is not new to law enforcement. From facial recognition to predictive policing, AI has been integrated into various aspects of police work. However, each new application brings with it concerns about bias, privacy, and the potential for misuse.

For instance, in Oklahoma City, community activist aurelius francisco has voiced concerns about the implications of AI in policing. He argues that AI-generated reports could exacerbate existing issues of racial bias and prejudice, particularly if the technology is developed by companies that also produce law enforcement tools like Tasers.

Francisco’s concerns are echoed by others who fear that AI could make it easier for police to engage in practices that disproportionately impact marginalized communities. The automation of report writing, while beneficial in reducing the time officers spend on paperwork, could also lead to less careful documentation and a lack of accountability.

The Future of AI-Generated Police Reports in Court

As AI-generated police reports become more common, the legal system will need to adapt. Currently, in Oklahoma City, the use of AI-generated reports is limited to minor incidents that do not result in arrests or serious charges. However, in other cities like Lafayette, Indiana, and Fort Collins, Colorado, officers have broader discretion to use AI for more serious cases.

Before AI-generated reports can be fully integrated into the criminal justice system, there must be robust safeguards to ensure their accuracy and reliability. This includes clear guidelines on how these reports are to be used in court and ongoing research to address any potential biases embedded in the technology.

Furthermore, there is a need for public discourse on the ethical implications of AI in law enforcement. Legal scholars, such as Andrew Ferguson, emphasize the importance of scrutinizing the impact of AI on the justice system. They argue that while AI can reduce the burden of paperwork on officers, it also risks compromising the careful consideration that human officers typically apply when documenting their observations.

Conclusion: A Forensic Science Perspective on AI-Generated Police Reports

The introduction of AI-generated police reports represents a significant advancement in law enforcement, offering the potential for greater efficiency and accuracy in crime reporting. However, this technology also poses new challenges for the legal system, particularly in ensuring that these reports can withstand judicial scrutiny.

From a forensic science perspective, the key to successfully integrating AI into police work lies in maintaining a balance between technological innovation and human oversight. As the use of AI chatbots like Draft One becomes more widespread, it will be crucial for law enforcement agencies, legal professionals, and policymakers to work together to establish best practices and ethical standards that safeguard the integrity of the criminal justice process.